Rotating Residential Proxies for Web Scraping in 2026: An Engineering Guide to Choosing, Validating, and Operating at Scale

Rotating residential proxies are easy to “make work” in a five-minute demo and hard to keep stable in production. The failure pattern is consistent: early success, then a slow slide into more 403s, more 429s, higher tail latency, and exploding retry volume that makes everything worse.

If you are building a production scraper, treat rotating residential proxies as a reliability system, not a purchase. Your job is to prove the path, measure drift, control retries, and score providers by outcomes, not marketing. A practical starting point for rotation behavior and operating controls is Rotating residential proxies.

This guide is written for operators who care about three numbers: success rate, stability over time, and cost per successful page.

Why rotating residential proxies fail in production even when they look fine in a trial

A trial usually tests “can I fetch one page.” Production tests “can I fetch a million pages without collapsing the target or my own systems.”

Common failure mechanics you should expect:

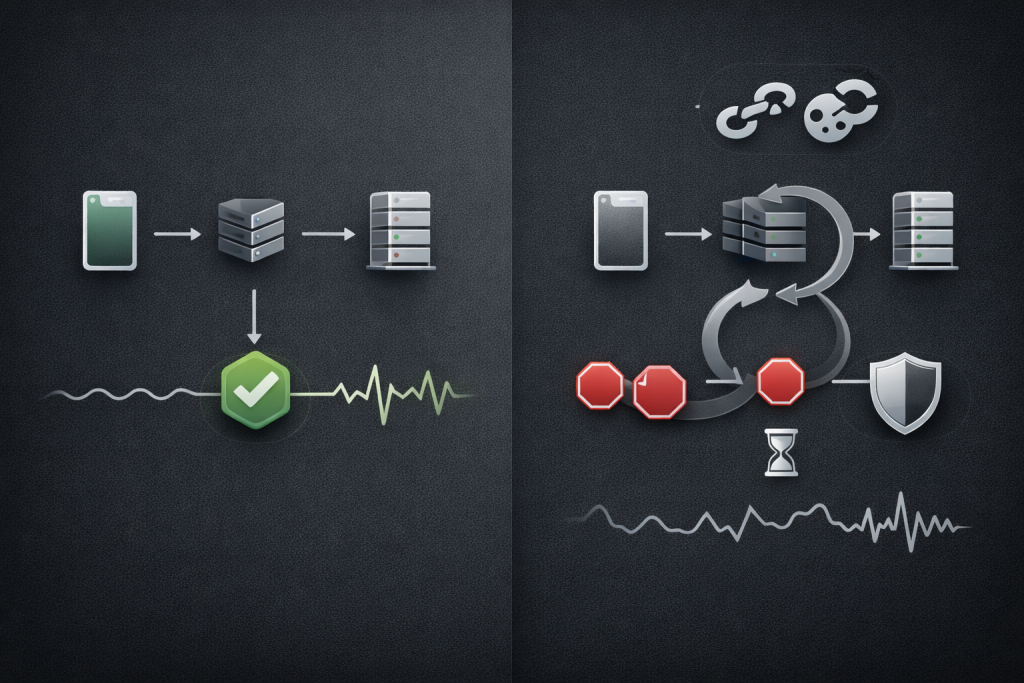

- Pool fatigue: the same exits get reused more often than you expect, their reputation degrades, and blocks trend upward.

- Retry amplification: naive retries cause burst traffic that triggers stricter rate limits and more blocks.

- Identity drift: the IP rotates, but headers, cookies, and behavior do not align with the new identity, creating correlation signals.

- Tail latency creep: p95 and p99 rise first; throughput collapses even while the median looks fine.

Track these four metrics from day one:

- Success rate by target class (static HTML, JSON API, JS-heavy)

- Block rate split by code and challenge type

- p95 and p99 latency plus timeout rate

- Cost per successful page, not cost per GB
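The four metrics above can be computed from per-request records. Below is a minimal Python sketch. It assumes a hypothetical `RequestRecord` that holds a target class, an HTTP status (`None` for a timeout) and a latency. It splits blocks by status code only, because challenge-type detection is target-specific. Percentiles use the nearest-rank method over completed requests.

```python
import math
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestRecord:
    target_class: str        # e.g. "static_html", "json_api", "js_heavy" (hypothetical labels)
    status: Optional[int]    # None means the request timed out
    latency_ms: float


def percentile(values, pct):
    """Nearest-rank percentile over a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def is_success(r):
    return r.status is not None and 200 <= r.status < 300


def summarize(records, total_cost):
    """Summarize one test window; total_cost is what that window cost you."""
    per_class = defaultdict(lambda: [0, 0])  # class -> [successes, requests]
    for r in records:
        per_class[r.target_class][0] += is_success(r)
        per_class[r.target_class][1] += 1
    successes = sum(s for s, _ in per_class.values())
    # Latency tails cover completed requests; timeouts are reported as a rate.
    latencies = [r.latency_ms for r in records if r.status is not None]
    return {
        "success_rate_by_class": {c: s / n for c, (s, n) in per_class.items()},
        "blocks_by_code": Counter(r.status for r in records if r.status in (403, 429)),
        "timeout_rate": sum(r.status is None for r in records) / len(records),
        "p95_ms": percentile(latencies, 95) if latencies else None,
        "p99_ms": percentile(latencies, 99) if latencies else None,
        "cost_per_success": total_cost / successes if successes else math.inf,
    }
```

This version divides the window's spend by successful pages rather than by gigabytes. As a result, retries and blocks show up directly in the cost number.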

What rotating residential proxies change and what they do not

Rotating residential proxies change your egress network identity. They do not automatically solve correlation, behavior modeling, or compliance.

In practical terms, rotation can give you:

- Egress IP variance and geo selection

- ASN diversity, depending on the pool

- Session tools such as sticky mode and TTL-based rotation

- Basic routing separation between your worker fleet and targets

But rotation does not fix:

- Browser and TLS fingerprints

- Cookie and session coherence

- Request pacing and concurrency spikes

- Resource patterns that look like automation

Rules in robots.txt are also commonly misunderstood. The Robots Exclusion Protocol is guidance that crawlers are requested to honor, and the standard explicitly notes that its rules are not a form of access authorization. See RFC 9309: Robots Exclusion Protocol.

For teams building a rotation-first architecture, frame the proxy layer as a routing primitive, not a permission mechanism. When you model your system this way, it becomes obvious why you need verification gates and an evidence bundle.

A rotation overview that matches this routing-first framing is in Rotating proxies.

Quick picker: choose the right rotation mode by intent and risk

Pick the rotation mode that minimizes correlation for your workflow, while keeping retries and cost predictable.

Low-risk catalog crawl

- Rotation mode: per-request or short sticky TTL

- Pacing: steady, low concurrency, long-lived workers

- Goal: maximize coverage, minimize repeated hits per host

High-volume price monitoring

- Rotation mode: pool pinning plus controlled per-host budgets

- Pacing: strict host-level rate control

- Goal: predictable throughput and error budgets

Login-adjacent workflows

- Rotation mode: sticky sessions with explicit TTL

- Pacing: conservative cadence, strict session hygiene

- Goal: session continuity and reduced identity drift

Anti-bot-heavy targets

- Rotation mode: sticky with cautious pool expansion

- Pacing: slower ramps, early stop conditions on 403 and 429

- Goal: stay under trigger thresholds rather than trying to “break through” them

Stop thinking in proxy types. Start thinking in traffic shape and stop conditions.
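One way to act on this is to encode each workflow's traffic shape and stop condition as configuration instead of a proxy-type choice. Below is a minimal sketch. The profile names mirror the picker above. The field names and every number are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RotationProfile:
    mode: str                     # "per_request" or "sticky"
    sticky_ttl_s: Optional[int]   # None when every request rotates
    pinned_pool: bool             # keep traffic on one pool/region
    max_concurrency_per_host: int
    stop_block_rate: float        # pause the route when the 403+429 share exceeds this


# Placeholder values for illustration only; derive real ones from your gate results.
PROFILES = {
    "catalog_crawl": RotationProfile("per_request", None, False, 4, 0.05),
    "price_monitoring": RotationProfile("per_request", None, True, 8, 0.03),
    "login_adjacent": RotationProfile("sticky", 1800, False, 1, 0.01),
    "anti_bot_heavy": RotationProfile("sticky", 600, False, 2, 0.02),
}


def pick_profile(intent: str) -> RotationProfile:
    """Look up a profile by workflow intent; unknown intents fail loudly."""
    try:
        return PROFILES[intent]
    except KeyError:
        raise ValueError(
            f"unknown intent {intent!r}; expected one of {sorted(PROFILES)}"
        ) from None
```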

Threat model and risk boundaries for scraping with rotating residential proxies

Targets correlate more than IP. Your threat model should list correlation surfaces explicitly.

Correlation surfaces you should assume exist

- TLS fingerprint and connection reuse patterns

- Header sets and ordering

- Cookie churn rate and session graph structure

- Request timing, bursts, and navigation sequences

- Resource graph patterns like “only API calls with no assets”

Controls you own

- Concurrency by host and by route

- Retry policy and stop conditions

- Session strategy, including TTL and cookie isolation

- Observability: request IDs, exit sampling, and failure taxonomy
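Per-host pacing is the control you own most directly. Below is a minimal token-bucket sketch. It assumes a single-threaded or asyncio worker, since it has no locking. `rate` and `burst` are hypothetical knobs. A per-host concurrency cap would sit next to it as a separate semaphore.

```python
import time
from collections import defaultdict


class HostRateLimiter:
    """Token bucket per host: refills at `rate` tokens/s, holds at most `burst`."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock  # injectable so tests can control time
        self.tokens = defaultdict(lambda: float(burst))
        self.updated = {}

    def try_acquire(self, host: str) -> bool:
        """Spend one token for `host` if one is available; never blocks."""
        now = self.clock()
        elapsed = now - self.updated.get(host, now)
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens[host] = min(self.burst, self.tokens[host] + elapsed * self.rate)
        self.updated[host] = now
        if self.tokens[host] >= 1:
            self.tokens[host] -= 1
            return True
        return False
```

A worker that gets `False` should requeue the URL with a delay rather than spin.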

Responsible crawling signals you should respect

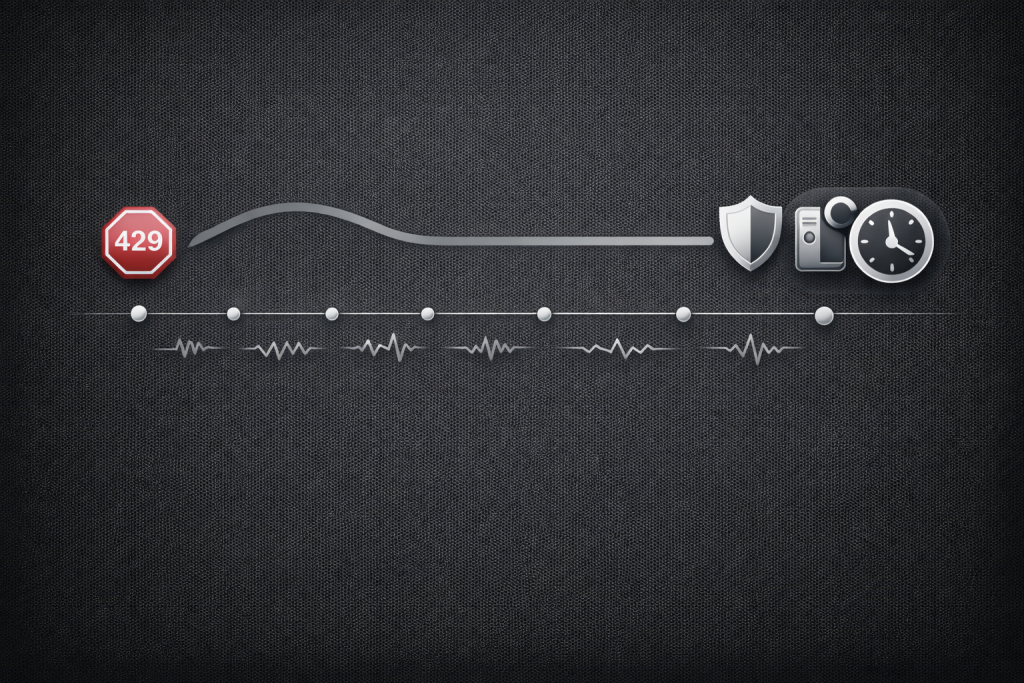

Rate limiting is commonly expressed by HTTP 429, and a response may include Retry-After to tell clients how long to wait. MDN’s reference is a clean baseline: HTTP 429 Too Many Requests. When you ignore these signals, you often create your own failure spiral.

The four verification gates prove quality before you scale

These gates are designed to be measurable, repeatable, and cheap to run.

Gate 1: routing and egress correctness

What to prove:

- Rotation behaves as configured

- Geo and ASN are consistent with your needs

What to measure:

- Unique exit IPs per 1,000 requests

- Exit reuse rate per host

- Geo mismatch rate from your verification endpoint

Failure signals:

- “Rotating” behaves like a tiny pool

- Geo drift under load

- Exit IPs disappear during peak hours
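Gate 1's measurements reduce to counting over (host, exit IP) pairs collected from your verification endpoint. Below is a minimal sketch. The input shape is an assumption, and the default window of 1,000 requests matches the metric above.

```python
from collections import Counter, defaultdict


def exit_stats(samples, window=1000):
    """samples: list of (host, exit_ip) pairs in request order."""
    ips = [ip for _, ip in samples]
    # Unique exits in each full window of `window` requests (partial tail ignored).
    unique_per_window = [
        len(set(ips[i:i + window])) for i in range(0, len(ips) - window + 1, window)
    ]
    per_host = defaultdict(list)
    for host, ip in samples:
        per_host[host].append(ip)
    # Reuse rate: share of a host's requests that landed on an exit it had already seen.
    reuse_by_host = {h: 1 - len(set(v)) / len(v) for h, v in per_host.items()}
    # A large top-exit share suggests "rotating" is really a tiny pool.
    top_exit_share = Counter(ips).most_common(1)[0][1] / len(ips) if ips else 0.0
    return {
        "unique_exits_per_window": unique_per_window,
        "reuse_rate_by_host": reuse_by_host,
        "top_exit_share": top_exit_share,
    }
```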

Gate 2: DNS path and leak checks

What to prove:

- DNS resolution follows your chosen design

- No hidden local resolver leakage during concurrency

What to measure:

- Resolver location consistency

- Leak rate under load tests, not single requests

Failure signals:

- Correct exit IP but DNS appears local

- Region mismatches that correlate with resolver behavior
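Gate 2 has two practical pieces. With SOCKS5 in the Python `requests` ecosystem, the `socks5h://` scheme has the proxy resolve hostnames. Plain `socks5://` resolves them on the client, which is one common source of “correct exit IP, local DNS”.

The sketch below only builds the proxy URL and computes a mismatch rate. It assumes the `(exit_country, resolver_country)` samples come from a DNS leak-test endpoint of your choice. The gateway host and credentials are placeholders.

```python
from urllib.parse import quote


def socks_proxy_url(host, port, user, password, remote_dns=True):
    """socks5h:// lets the proxy resolve DNS; socks5:// resolves on this machine."""
    scheme = "socks5h" if remote_dns else "socks5"
    # Percent-encode credentials so characters like '@' or ':' do not break the URL.
    return f"{scheme}://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


def resolver_mismatch_rate(samples):
    """samples: (exit_country, resolver_country) pairs gathered under load."""
    samples = list(samples)
    if not samples:
        return 0.0
    return sum(exit_cc != dns_cc for exit_cc, dns_cc in samples) / len(samples)
```

With `requests` and its optional SOCKS support installed, you would pass the URL as `proxies={"http": url, "https": url}`. Collect mismatch samples at your production concurrency, not from single requests.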

Gate 3: block and rate-limit pressure

What to prove:

- Your retry policy does not amplify rate limits

- You can classify failures into actionable buckets

What to measure:

- 403 rate, 429 rate, timeout rate

- Retry-After presence and distribution when 429 occurs

If you receive 429, treat it as pacing feedback and honor Retry-After when present. MDN’s Retry-After reference is useful for implementation details: Retry-After header.
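For Gate 3, note that Retry-After can be either a number of seconds or an HTTP-date, so a parser needs both paths. Below is a minimal standard-library sketch, paired with a hypothetical bucket classifier. The bucket names are assumptions, chosen to line up with the troubleshooting flow later in this guide.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Parse Retry-After (delay-seconds or HTTP-date); None if absent or unparseable."""
    if value is None:
        return None
    value = value.strip()
    if value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # the exception type varies across Python versions
        return None
    if when.tzinfo is None:  # treat a naive date as UTC
        when = when.replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


def classify(status: Optional[int]) -> str:
    """Map one response to an actionable failure bucket (None means timeout)."""
    if status is None:
        return "timeout"
    if status == 429:
        return "rate_limited"
    if status == 403:
        return "blocked"
    if 500 <= status < 600:
        return "upstream_error"
    if 200 <= status < 400:
        return "ok"
    return "other"
```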

Gate 4: soak stability under real traffic shape

What to prove:

- Stability over time, not minutes

- Success rate and latency tails do not drift upward

What to measure:

- Success rate drift over 60–180 minutes

- p95 and p99 latency drift

- Block drift after 24 hours on the same target
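Gate 4 is about slopes, not snapshots. Below is a minimal sketch that compares the mean of the earliest time buckets with the mean of the latest ones. The bucket size, `k` and both thresholds are hypothetical; take them from your own baseline.

```python
from statistics import mean


def drift(buckets, k=3):
    """buckets: (success_rate, p99_ms) per fixed time bucket, oldest first.

    Compares the mean of the first k buckets (baseline) with the last k (recent)."""
    if len(buckets) < 2 * k:
        raise ValueError("need at least 2*k buckets")
    base_sr = mean(sr for sr, _ in buckets[:k])
    base_p99 = mean(p for _, p in buckets[:k])
    recent_sr = mean(sr for sr, _ in buckets[-k:])
    recent_p99 = mean(p for _, p in buckets[-k:])
    return {"success_rate_delta": recent_sr - base_sr, "p99_ratio": recent_p99 / base_p99}


def soak_passes(buckets, max_success_drop=0.02, max_p99_ratio=1.5, k=3):
    """Hypothetical pass/fail thresholds; tune them per target class."""
    d = drift(buckets, k)
    return d["success_rate_delta"] >= -max_success_drop and d["p99_ratio"] <= max_p99_ratio
```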

When you run these gates across providers, apply the same traffic shape and capture the same evidence bundle every time. This is where MaskProxy fits naturally: you evaluate it the same way you evaluate everything else, and keep only what passes the gates and holds under soak.

A short overview of proxy types and operational expectations is in Residential proxies.

Lab 1: measure rotation reality, identity drift, and pool health

Goal: prove rotation behavior and quantify pool health with a repeatable test.

Steps

- Pick a verification endpoint that returns:
  - observed IP
  - request headers
  - approximate geo hints if available

- Run three modes:
  - per-request rotation
  - sticky session with 10-minute TTL
  - sticky session with 60-minute TTL

- Collect for each request:
  - timestamp
  - status code
  - latency
  - exit IP
  - a stable request ID you generate

Minimal workflow

- Run N=1,000 requests per mode

- Use a concurrency of 5 to 20, depending on target risk

- Add a 1–3 second randomized sleep between requests to avoid burst patterns

Expected signals

- Per-request rotation should show high IP churn but still some reuse

- Sticky should show stable exit until TTL, then a controlled shift

- If sticky changes frequently, session control is unreliable
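The sticky-mode check in Lab 1 can be scored per session. Below is a minimal sketch. It assumes the TTL counts from when an exit is first observed. Providers may instead count from session creation or from the last request, so adjust it to the semantics they document.

```python
def premature_exit_changes(records, ttl_s):
    """records: (timestamp_s, exit_ip) for one sticky session, sorted by time.

    Counts exit changes observed before ttl_s elapsed since the current exit
    was first seen. The real change happened somewhere between two samples,
    so this is an approximation that tightens as you sample more often."""
    if not records:
        return 0
    changes = 0
    pinned_since, current_ip = records[0]
    for ts, ip in records[1:]:
        if ip != current_ip:
            if ts - pinned_since < ttl_s:
                changes += 1
            pinned_since, current_ip = ts, ip
    return changes
```

A non-zero count on the 10-minute or 60-minute TTL runs is the “session control is unreliable” signal.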

Lab 2: rate-limit-safe retries, backoff with jitter, and stop conditions

Goal: prevent self-sabotage from naive retries.

Retry rules you should implement

- If 429 includes Retry-After, honor it before retrying.

- Otherwise use exponential backoff with jitter and cap the backoff.

- Add stop conditions:
  - stop the route if 403 or 429 rises above a threshold
  - stop if timeout rate spikes
  - stop if p99 latency doubles

Why jitter matters: if many workers retry at the same time, you create synchronized bursts that worsen congestion. AWS documents the concept clearly: Exponential Backoff and Jitter.
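The retry rules and the jitter argument combine into a small policy function. Below is a minimal sketch of capped exponential backoff with “full jitter”, one of the variants the AWS article describes. `fetch`, the retryable status set, and the base and cap values are assumptions to replace with your own.

```python
import random
import time
from typing import Callable, Optional, Tuple


def next_delay(attempt: int, retry_after_s: Optional[float] = None,
               base_s: float = 1.0, cap_s: float = 60.0, rng=random) -> float:
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after_s is not None:
        return retry_after_s  # the server asked for this wait; honor it
    # Full jitter: uniform in [0, min(cap, base * 2**attempt)].
    return rng.uniform(0, min(cap_s, base_s * 2 ** attempt))


RETRYABLE = {429, 500, 502, 503, 504}  # hypothetical set; 403 is deliberately absent


def fetch_with_retries(fetch: Callable[[], Tuple[int, Optional[float]]],
                       max_attempts: int = 4, sleep=time.sleep) -> int:
    """fetch() is a hypothetical callable returning (status, retry_after_s)."""
    status = None
    for attempt in range(max_attempts):
        status, retry_after = fetch()
        if status not in RETRYABLE:
            return status  # success, or a failure retries will not fix
        if attempt < max_attempts - 1:
            sleep(next_delay(attempt, retry_after))
    return status
```

If a Retry-After value exceeds your latency budget, requeuing the URL is usually better than parking a worker in `sleep`.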

Metrics

- Retries per successful page

- Total backoff time spent per 1,000 successes

- Reduction in repeated 429 bursts after adding jitter

If you want to communicate this policy internally, don’t call it “retry logic.” Call it “rate-limit compliance and damage control.”
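The stop conditions from the retry rules can be evaluated per route on a rolling window. Below is a minimal sketch. `RouteWindow` and the default thresholds are hypothetical, and the p99 check compares against a baseline taken while the route was healthy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RouteWindow:
    """Counts for one route over a recent time window."""
    requests: int
    blocked: int     # 403 + 429 responses
    timeouts: int
    p99_ms: float


def stop_reasons(w: RouteWindow, baseline_p99_ms: float,
                 max_block_rate: float = 0.05, max_timeout_rate: float = 0.05) -> List[str]:
    """Return why the route should pause; an empty list means keep going.

    The rate thresholds are hypothetical defaults, not recommendations."""
    if w.requests == 0:
        return []
    reasons = []
    if w.blocked / w.requests > max_block_rate:
        reasons.append("block_rate")
    if w.timeouts / w.requests > max_timeout_rate:
        reasons.append("timeout_rate")
    if w.p99_ms >= 2 * baseline_p99_ms:  # "stop if p99 latency doubles"
        reasons.append("p99_doubled")
    return reasons
```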

Provider evaluation rubric: score tradeoffs instead of chasing marketing claims

This is how you beat listicles: you give readers a scoring method they can apply to any provider.

Score providers on outcomes, with explicit weights:

- Delivery: success rate by target class

- Pool quality: exit reuse rate, failure clustering, drift over time

- Control plane: sticky TTL controls, geo options, session policies

- Performance: p95 and p99 latency, timeout rate, variance during peak hours

- Observability: exit visibility, request IDs, error taxonomy, support diagnostics

- Commercial: cost per successful page, predictability under scale

- Trust signals: sourcing transparency, abuse handling, documented policies
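The rubric calls for explicit weights. Below is a minimal sketch of how per-criterion results could roll up into one score. The criterion keys mirror the list above. The weight values and the min-max normalization are assumptions; replace them with your own priorities.

```python
# Hypothetical weights that sum to 1.0; the rubric asks for explicit weights
# but does not prescribe values.
WEIGHTS = {
    "delivery": 0.30, "pool_quality": 0.15, "control_plane": 0.10,
    "performance": 0.15, "observability": 0.10, "commercial": 0.15, "trust": 0.05,
}


def normalize(raw, higher_is_better=True):
    """Min-max scale {provider: metric} to 0..1 across one bake-off."""
    lo, hi = min(raw.values()), max(raw.values())
    if hi == lo:
        return {p: 1.0 for p in raw}
    return {p: (v - lo) / (hi - lo) if higher_is_better else (hi - v) / (hi - lo)
            for p, v in raw.items()}


def weighted_score(scores, weights=WEIGHTS):
    """scores: criterion -> 0..1 value for one provider."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights) / sum(weights.values())
```

For lower-is-better metrics such as p99 latency or cost per successful page, normalize with `higher_is_better=False` before scoring.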

Run a fair bake-off:

- same target set

- same request mix

- same traffic shape

- same time window

- same evidence bundle format

If you need a consistent way to reason about HTTP versus SOCKS and where you terminate DNS and TLS, align your rubric with protocol choices. A compact reference for that layer is Proxy protocols.

Troubleshooting flow: symptom to likely cause to first fix

Use this structure in incident response: symptom, likely cause, first fix, then what to measure next.

- Symptom: 429 spikes with Retry-After present
  - Likely cause: host budget exceeded, retry storm, or missing wait compliance
  - First fix: honor Retry-After, reduce concurrency, add jittered backoff
  - Measure next: retries per success, 429 distribution by host

- Symptom: 403 spikes without 429
  - Likely cause: reputation threshold, fingerprint mismatch, request graph anomalies
  - First fix: slow the ramp, reduce request similarity, widen the pool cautiously
  - Measure next: 403 clustering by exit IP and path

- Symptom: Geo drift under load
  - Likely cause: pool substitution, failover into different regions, DNS mismatch
  - First fix: pin the region, lower concurrency, validate geo per route
  - Measure next: geo mismatch rate per 1,000 requests

- Symptom: Correct exit IP but wrongly localized content
  - Likely cause: DNS path leakage or upstream resolver behavior
  - First fix: change DNS strategy, verify resolver behavior under concurrency
  - Measure next: resolver location consistency

- Symptom: Stable on day 1, collapses by day 3
  - Likely cause: pool fatigue, retries increasing background load, slow reputation decay
  - First fix: introduce soak gates, rotate routes, enforce stop conditions
  - Measure next: drift curves for success rate and tail latency

- Symptom: p99 latency explodes while success rate looks okay
  - Likely cause: congested exits, overloaded subnets, compounding retries
  - First fix: cap concurrency, move to a healthier pool, reduce timeouts
  - Measure next: p95, p99, and timeout rate by exit group

Evidence bundle checklist: what to capture so your results are credible

Capture this every time you test a route or a provider:

- Request mix definition and time window

- Status histogram for 2xx, 3xx, 403, 429, 5xx, and timeouts

- Retry counts and backoff totals

- Exit IP samples and estimated reuse rate

- p50, p95, p99 latency and timeout rate

- 10 to 30 raw failure examples with timestamps and exit samples

- Configuration snapshot: rotation mode, session TTL, concurrency, pacing

This evidence bundle is the difference between “it feels better” and “it is better.”
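Keeping the bundle as a structured record makes week-over-week comparison mechanical. Below is a minimal sketch. The field names are hypothetical, and JSON is just one convenient storage format. Latency percentiles and exit reuse estimates would be attached from your own metrics code.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


def status_bucket(status: Optional[int]) -> str:
    """Buckets matching the checklist: 2xx, 3xx, 403, 429, other 4xx, 5xx, timeout."""
    if status is None:
        return "timeout"
    if status in (403, 429):
        return str(status)
    return f"{status // 100}xx"


@dataclass
class EvidenceBundle:
    route: str
    window_start: str            # ISO 8601 timestamps
    window_end: str
    config: dict                 # rotation mode, session TTL, concurrency, pacing
    status_histogram: dict = field(default_factory=dict)
    retries: int = 0
    backoff_s_total: float = 0.0
    failure_examples: list = field(default_factory=list)

    def record(self, status, ts, exit_ip, max_examples=30):
        """Count one response and keep up to max_examples raw failures."""
        bucket = status_bucket(status)
        self.status_histogram[bucket] = self.status_histogram.get(bucket, 0) + 1
        if bucket not in ("2xx", "3xx") and len(self.failure_examples) < max_examples:
            self.failure_examples.append({"ts": ts, "status": status, "exit_ip": exit_ip})

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)
```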

E-E-A-T disclosure: how this guide was built and how to trust it

This guide is built from production scraping reliability practices: measurable gates, controlled traffic shapes, and repeatable experiments that separate routing quality from retry mistakes.

Methodology:

- Four verification gates that you can rerun on any provider

- Two labs that quantify rotation reality and retry safety

- Evidence bundles so results are comparable over time

For search intent alignment, Google’s guidance on helpful, reliable, people-first content is a solid standard for what should be on the page and why: Creating helpful, reliable, people-first content.

Summary: the decision you should make before you spend more on IPs

- Pick rotation mode by intent and session needs, not by habit.

- Validate with the four gates before scaling traffic.

- Treat 429 as pacing feedback and honor Retry-After.

- Use jittered backoff and stop conditions to prevent retry storms.

- Score providers by measurable outcomes, not feature checklists.

- Keep evidence bundles so you can compare routes week over week.

If you want cost predictability in the same evidence-driven way, keep pricing analysis attached to your cost-per-success metric, not your bandwidth metric. You can map that cleanly to Rotating Residential Proxies Pricing.

Daniel Harris is a Content Manager and Full-Stack SEO Specialist with 7+ years of hands-on experience across content strategy and technical SEO. He writes about proxy usage in everyday workflows, including SEO checks, ad previews, pricing scans, and multi-account work. He’s drawn to systems that stay consistent over time and writing that stays calm, concrete, and readable. Outside work, Daniel is usually exploring new tools, outlining future pieces, or getting lost in a long book.

FAQ

1. Do rotating residential proxies guarantee I will not be blocked?

No. Rotation changes egress IP. Blocks are triggered by multiple signals, including behavior, request graph, and correlation across sessions.

2. Should I always use per-request rotation?

No. Use sticky sessions for workflows that require continuity. Use per-request when you need coverage and you are hitting per-IP rate limits.

3. Is 429 a ban?

Usually it is rate limiting. Treat it as a pacing signal, honor Retry-After when present, and reduce concurrency.

4. What is the fastest way to tell if a pool is small?

Measure exit reuse rate across 1,000 requests, and check whether failures cluster on a small set of exits.

5. Why does success rate drop after a day or two?

Pool fatigue and retry amplification are common causes. Soak tests and stop conditions catch this early.