Datacenter Proxies Validation Playbook for Scraping and Monitoring

Datacenter proxies are often the fastest path to predictable egress for scraping, monitoring, price intelligence, and QA. The problem is that “datacenter proxies” can mean anything from dedicated IPs with stable performance to shared pools that look fine in a single curl test and collapse under concurrency.

This guide is a production evaluation playbook. You will define measurable acceptance criteria, run verification gates with commands and expected signals, score plans with a rubric, and catch free-plan traps before you waste time or ship risk. For a baseline reference on common datacenter proxy options and usage patterns, see Datacenter Proxies.

What datacenter proxy servers are and where they win

A datacenter proxy server is an IP address hosted in a cloud or colocation environment. Teams choose them for:

- Speed and predictable latency

- Clear operational controls such as authentication and stable endpoints

- Cost efficiency for high-volume workloads

They tend to win in:

- Price monitoring and catalog crawling with low-to-medium bot defense

- Uptime checks and synthetic monitoring where latency jitter triggers noisy alerts

- QA environments where repeatability matters

- Bulk API polling where disciplined pacing keeps you stable

They tend to lose in:

- Session-heavy account workflows with strict fraud controls

- Targets that aggressively block hosting ASNs

- Use cases where end-user locality must match a true residential footprint

Practical takeaway: treat datacenter proxies like any other production dependency. If you cannot measure success rate, latency percentiles, and block signals under load, you are guessing.

Free datacenter proxies vs public free proxy lists

“Free” usually means one of two things.

Provider free plans:

- Documented endpoints and authentication

- Predictable limits you can test and monitor

- Reasonable for a controlled datacenter proxy free trial

Public free proxy lists:

- Unknown operators and interception risk

- High churn and inconsistent performance

- Not suitable for anything that touches credentials or private data

Safety baseline for any free testing:

- Never send credentials, cookies, API keys, or personal data through unknown proxy endpoints

- Use throwaway test targets and non-sensitive endpoints

- Log only what you must, and redact proxy credentials immediately

- Segment test traffic so it cannot contaminate production data or monitoring
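
One way to make the redaction habit automatic is to mask credentials before a proxy URL ever reaches a log line. The sketch below is a minimal example using only the standard library; the function name `redact_proxy_url` and the `***` placeholder are illustrative choices, not part of any provider's tooling.

```python
from urllib.parse import urlsplit, urlunsplit


def redact_proxy_url(proxy_url: str) -> str:
    """Return proxy_url with any user:pass portion replaced by a placeholder."""
    parts = urlsplit(proxy_url)
    if parts.username is None and parts.password is None:
        return proxy_url  # nothing to hide
    host = parts.hostname or ""
    if ":" in host:
        host = f"[{host}]"  # restore brackets around IPv6 literals
    netloc = f"***:***@{host}"
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```

For example, `redact_proxy_url("http://user:secret@proxy.example:8080")` returns `http://***:***@proxy.example:8080`, which is safe to write into benchmark logs.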

For proxy authentication semantics and status codes, use the normative definition in RFC 7235 (now obsoleted by RFC 9110, which carries the same material) and the operational reference for HTTP 407.

Define requirements before you test

Write this down first, or every benchmark result will be hard to interpret.

Target profile

- Target category: SERP, e-commerce, travel, public APIs, content sites

- Bot-defense level: low, medium, high

- Request types: HTML pages, JSON APIs, assets, headless browser traffic

- Success definition: “200 OK with correct content,” not just “TCP connected”

Constraints

- Geo requirements: country, region, city

- Protocol: HTTP, HTTPS CONNECT, SOCKS5

- Auth method: IP allowlist or user-pass

- Concurrency target: peak parallel requests

- Volume: requests per day and bandwidth per day

Metrics that matter

- Success rate overall and per target class

- p50 and p95 latency and jitter

- Timeout rate

- Block page and CAPTCHA rate

- Retry amplification: how many attempts per successful request

Acceptance criteria with pass and fail thresholds

These thresholds are conservative. Tighten them for SLA monitoring and relax them for exploratory crawling.

Reliability gates

- Success rate: at least 97% on low-risk targets; at least 92% on medium-risk targets

- Timeout rate: at most 1% sustained during steady-state

- Retry amplification: at most 1.3x over a steady-state window

Performance gates

- p95 latency ceiling: define per target class and enforce under load

- Jitter control: p95 should not exceed about 2x p50 after warm-up

Stability gates

- Sticky behavior: exit IP and behavior should remain consistent in sticky tests

- Churn control: rotation should not create large error spikes at your intended concurrency

- Peak-hour resilience: performance should not collapse during predictable busy windows

Security and correctness gates

- Authentication failures must be explainable and reproducible

- No unexpected header injection or TLS quirks that break your client stack
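
The numeric reliability and performance gates above can be encoded as a small pass/fail check so that every benchmark run is judged the same way. This is a sketch under stated assumptions: `WindowStats` and `check_gates` are hypothetical names, success and timeout rates are computed per attempt, and retry amplification is taken as attempts divided by successes.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class WindowStats:
    attempts: int   # every request sent in the window, retries included
    successes: int  # responses that returned correct content
    timeouts: int
    p50_ms: float
    p95_ms: float


def check_gates(s: WindowStats, min_success: float = 0.97) -> Dict[str, bool]:
    """Return pass/fail per gate; use min_success=0.92 for medium-risk targets."""
    success_rate = s.successes / s.attempts if s.attempts else 0.0
    timeout_rate = s.timeouts / s.attempts if s.attempts else 1.0
    amplification = s.attempts / s.successes if s.successes else float("inf")
    return {
        "success_rate": success_rate >= min_success,
        "timeout_rate": timeout_rate <= 0.01,
        "retry_amplification": amplification <= 1.3,
        "jitter": s.p95_ms <= 2.0 * s.p50_ms,
    }
```

A run is acceptable only when `all(check_gates(stats).values())` is true; the per-gate dictionary makes it obvious which threshold failed.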

Verification plan with commands and expected signals

Run four gates. Do not skip Gate 1. Most “proxy problems” are client configuration errors.

Gate 1: Connectivity and authentication

If the proxy rejects your credentials, you will often see 407 Proxy Authentication Required and a challenge header that indicates the expected auth scheme. For a concise explanation of proxy challenge behavior, see MDN Proxy-Authenticate Header.

Curl checklist:

- Confirm the proxy is actually being used

- Confirm credentials are accepted

- Confirm you can reach an HTTPS target through CONNECT

# 1) Show whether a proxy is in play and what happens on failure

curl -v -x http://PROXY_HOST:PROXY_PORT https://example.com/

# 2) Proxy auth with user:pass

curl -v -x http://PROXY_HOST:PROXY_PORT --proxy-user "USER:PASS" https://example.com/

# 3) Exit IP check (replace with a safe IP echo endpoint you control)

curl -sS -x http://PROXY_HOST:PROXY_PORT --proxy-user "USER:PASS" https://api.ipify.org

Expected signals:

- Wrong creds or wrong auth mode produces 407 responses

- Correct creds produce stable success and a stable exit IP for non-rotating tests

- Sudden timeouts at Gate 1 usually indicate an unhealthy endpoint, upstream blocks, or DNS/connectivity issues

If you need a reliable reference for curl proxy flags and edge cases, use Everything curl Proxy Guide.

Gate 2: Protocol support and DNS expectations

Your toolchain must match the proxy protocol. Mixing HTTP proxy settings with SOCKS endpoints produces misleading results, especially around DNS behavior.

SOCKS5 is commonly used when you want explicit control over DNS resolution behavior in your client stack. If you need a quick reference for the typical modes teams rely on, see SOCKS5 Proxies.

DNS sanity checks:

- If you expect proxy-side DNS, ensure your client is configured for it

- Verify hostnames resolve consistently with your risk model and geo expectations

# SOCKS5 with remote DNS: --socks5-hostname is equivalent to -x socks5h://... (note the "h")

curl -v --socks5-hostname PROXY_HOST:PROXY_PORT https://example.com/

Expected signals:

- Consistent geo signals across repeated runs

- No resolver mismatch that violates your expectations

Gate 3: Rate limits, blocks, and reputation signals

When you see 429 Too Many Requests, treat it as a pacing problem first, not a proxy problem. The status code is defined in RFC 6585, and many targets use Retry-After as a hint for how long to wait.
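
To act on that hint, the client has to handle both forms of the header: a number of seconds or an HTTP date. The helper below is a minimal sketch; the name `retry_after_seconds` and the 120-second cap are assumptions chosen for illustration, not values from any specification.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None,
                        cap_s: float = 120.0) -> Optional[float]:
    """Convert a Retry-After header value into a wait in seconds, or None if unusable."""
    if not value:
        return None
    value = value.strip()
    if value.isascii() and value.isdigit():
        return min(float(value), cap_s)  # delta-seconds form
    try:
        when = parsedate_to_datetime(value)  # HTTP-date form
    except (TypeError, ValueError):
        return None
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return min(max((when - now).total_seconds(), 0.0), cap_s)
```

When the function returns `None`, fall back to your own backoff schedule rather than retrying immediately.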

What to record:

- Status breakdown: 200, 3xx, 4xx, 5xx

- 403, 401, CAPTCHA, and block pages as separate counters

- 429 rate and whether Retry-After appears

- Timeout and connect error rate

- Content correctness checks for “200 OK but wrong page” scenarios
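
The counters above are easier to keep separate if every response is mapped to exactly one bucket. The sketch below shows one possible mapping; the bucket names, the `BLOCK_MARKERS` phrases, and the `must_contain` check are hypothetical and should be replaced with markers that actually appear on your targets.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

# Hypothetical phrases; tune these to the block pages your targets really serve.
BLOCK_MARKERS = ("access denied", "unusual traffic")


def classify(status: Optional[int], body: str, must_contain: str) -> str:
    """Map one response to a single counter bucket."""
    if status is None:
        return "connect_error_or_timeout"
    if status == 429:
        return "429"
    if status in (401, 403):
        return str(status)
    lowered = body.lower()
    if "captcha" in lowered:
        return "captcha"
    if any(marker in lowered for marker in BLOCK_MARKERS):
        return "block_page"
    if 200 <= status < 300:
        # A 2xx only counts as success if the expected content is present.
        return "ok" if must_contain.lower() in lowered else "200_wrong_content"
    return f"{status // 100}xx"


def tally(samples: Iterable[Tuple[Optional[int], str]], must_contain: str) -> Counter:
    """Count responses per bucket for a batch of (status, body) pairs."""
    return Counter(classify(status, body, must_contain) for status, body in samples)
```

Comparing the `ok` count against `200_wrong_content` is what exposes soft blocks that a plain status breakdown would hide.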

IP reputation check approach:

- Sample a small set of exits

- Test low-risk endpoints you control plus representative targets

- Track ban rate and CAPTCHA rate per subnet, not just per IP
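
Per-subnet tracking can be sketched with the standard `ipaddress` module. The example assumes IPv4 exits grouped by /24, which is a common but arbitrary choice; `block_rate_by_subnet` is a hypothetical helper name.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def block_rate_by_subnet(observations: Iterable[Tuple[str, bool]],
                         prefix: int = 24) -> Dict[str, float]:
    """Group (exit_ip, was_blocked) observations by subnet and return the block rate for each."""
    totals: Dict[str, int] = defaultdict(int)
    blocked: Dict[str, int] = defaultdict(int)
    for ip, was_blocked in observations:
        # strict=False lets a host address stand in for its containing network
        subnet = str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False))
        totals[subnet] += 1
        blocked[subnet] += int(was_blocked)
    return {subnet: blocked[subnet] / totals[subnet] for subnet in totals}
```

A subnet whose rate is far above the pool average is a candidate for eviction even when some of its individual IPs still look clean.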

Expected signals:

- Healthy pool shows stable success rate, predictable 429 behavior during ramp, and consistent latency

- Risky pool shows blocks on first contact, high variance, unstable latency, and rapid churn

Gate 4: Load test without burning your trial

Most free plans fail under concurrency, not under single-request curl tests.

Ramp protocol:

- Warm-up: 1–2 minutes at low concurrency

- Ramp: increase every 60–90 seconds, for example 5 → 10 → 20 → 40

- Steady-state: hold at target concurrency for 5–10 minutes

- Cool-down: drop to low concurrency and observe recovery
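
Writing the ramp down as data keeps runs comparable between providers. The sketch below builds a schedule of (stage, concurrency, duration in seconds) tuples; the function name, the doubling rule, and the default durations are assumptions picked from within the ranges above, and the cool-down length is an arbitrary placeholder.

```python
from typing import List, Tuple


def ramp_schedule(target: int, start: int = 5, warmup_concurrency: int = 2,
                  warmup_s: int = 120, step_s: int = 60, steady_s: int = 300,
                  cooldown_s: int = 60) -> List[Tuple[str, int, int]]:
    """Return (stage, concurrency, duration_s) tuples, doubling concurrency up to target."""
    stages = [("warm-up", warmup_concurrency, warmup_s)]
    level = start
    while level < target:
        stages.append(("ramp", level, step_s))
        level *= 2
    stages.append(("steady-state", target, steady_s))
    stages.append(("cool-down", warmup_concurrency, cooldown_s))
    return stages
```

With `target=40` this yields ramp steps at 5, 10, and 20 before holding at 40, so each stage can be fed to a benchmark runner and summarized separately.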

Code-heavy benchmark runner in Python

Use this to produce repeatable numbers: success rate, latency percentiles, and error breakdown. Keep targets safe during testing.

import asyncio
import time
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

import httpx  # pip install httpx


@dataclass
class Result:
    ok: bool
    status: Optional[int]
    latency_ms: float
    error: Optional[str]


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile; returns NaN for an empty list."""
    if not values:
        return float("nan")
    values = sorted(values)
    k = int(round((len(values) - 1) * p))
    return values[k]


async def fetch(client: httpx.AsyncClient, url: str) -> Result:
    t0 = time.perf_counter()
    try:
        r = await client.get(url, timeout=15.0, follow_redirects=True)
        dt = (time.perf_counter() - t0) * 1000
        return Result(ok=(200 <= r.status_code < 300), status=r.status_code, latency_ms=dt, error=None)
    except Exception as e:
        # Timeouts and connect errors land here. For HTTPS targets, a rejected
        # CONNECT (such as a 407) typically surfaces as a ProxyError, not a status.
        dt = (time.perf_counter() - t0) * 1000
        return Result(ok=False, status=None, latency_ms=dt, error=type(e).__name__)


async def run_batch(
    url: str,
    total_requests: int,
    concurrency: int,
    proxy_url: str,
) -> Tuple[List[Result], float]:
    sem = asyncio.Semaphore(concurrency)  # caps in-flight requests
    results: List[Result] = []
    # httpx 0.26+ takes `proxy=`; the older `proxies=` argument was removed in 0.28.
    async with httpx.AsyncClient(
        proxy=proxy_url,
        headers={"User-Agent": "dc-proxy-benchmark/1.0"},
    ) as client:

        async def worker():
            async with sem:
                res = await fetch(client, url)
                results.append(res)

        t0 = time.perf_counter()
        await asyncio.gather(*[worker() for _ in range(total_requests)])
        elapsed = time.perf_counter() - t0
    return results, elapsed


def summarize(results: List[Result], elapsed_s: float) -> None:
    total = len(results)
    if total == 0:
        print("No results to summarize")
        return
    # Latency covers only requests that returned an HTTP status; errors are counted separately.
    lat = [r.latency_ms for r in results if r.status is not None]
    ok = sum(1 for r in results if r.ok)
    by_status: Dict[str, int] = {}
    for r in results:
        key = str(r.status) if r.status is not None else f"ERR:{r.error}"
        by_status[key] = by_status.get(key, 0) + 1
    print(f"Total: {total} OK: {ok} Success rate: {ok/total:.2%}")
    print(f"Elapsed: {elapsed_s:.2f}s RPS: {total/elapsed_s:.2f}")
    print(f"Latency ms p50: {percentile(lat, 0.50):.0f} p95: {percentile(lat, 0.95):.0f} p99: {percentile(lat, 0.99):.0f}")
    print("Status breakdown:", dict(sorted(by_status.items(), key=lambda kv: kv[1], reverse=True)))


if __name__ == "__main__":
    URL = "https://example.com/"  # replace with a safe endpoint
    PROXY = "http://USER:PASS@PROXY_HOST:PROXY_PORT"  # replace with your proxy
    results, elapsed = asyncio.run(run_batch(URL, total_requests=200, concurrency=20, proxy_url=PROXY))
    summarize(results, elapsed)

Interpretation checklist:

- A good plan keeps p95 stable as concurrency rises

- If 429 climbs, reduce concurrency, add backoff with jitter, and cap retries

- If 407 appears, fix auth configuration before changing anything else

Dedicated vs shared, static vs rotating, and what to test differently

Dedicated datacenter proxies reduce noisy-neighbor effects. Shared pools can be cost-effective, but you must score them under load.

Static proxies are a good fit when:

- You need allowlisting at the target

- You need stable sessions for long-running crawls

- You want consistent IP reputation over time

Rotating datacenter proxies are a good fit when:

- You need distribution across requests

- You can tolerate churn

- You are fighting simple per-IP rate caps

For rotation-specific knobs and common patterns teams depend on, Rotating Datacenter Proxies is a practical reference point when you map “rotation settings” to measurable outcomes.

Rotation test checklist:

- Rotation cadence is controllable and observable

- Churn does not explode error rates

- Subnet concentration does not exceed your target tolerance

- Pool does not collapse during peak hours

Scoring rubric for providers or plans

Use a rubric so “fast in a demo” does not beat “stable under load.”

Suggested weights:

- Network performance: 25%

- Reliability under workload: 25%

- IP quality and block resistance: 20%

- Control surface and features: 15%

- Observability and operations: 10%

- Trial clarity and commercial risk: 5%

What to measure:

- Network performance: p50 and p95 latency, jitter, throughput at fixed concurrency

- Reliability: success rate, timeouts, retry amplification

- IP quality: block rate, CAPTCHA rate, churn, subnet diversity

- Control surface: auth options, sticky sessions, rotation controls, geo targeting

- Observability: logs, usage counters, dashboards, incident transparency

- Commercial risk: bandwidth cap, concurrency cap, target restrictions, billing surprises
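
The suggested weights translate directly into a weighted sum. The sketch below assumes each category has already been scored on a 0 to 5 scale from your own measurements; the scale, the key names, and the function name `weighted_score` are illustrative assumptions.

```python
from typing import Dict

WEIGHTS: Dict[str, float] = {
    "network_performance": 0.25,
    "reliability": 0.25,
    "ip_quality": 0.20,
    "control_surface": 0.15,
    "observability": 0.10,
    "commercial_risk": 0.05,
}


def weighted_score(scores: Dict[str, float]) -> float:
    """Combine per-category scores (assumed 0-5) into one weighted total."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)
```

As an illustration with made-up scores, a plan rated 5 on network performance but 2 on reliability totals about 3.45, while a plan rated 3 and 5 on the same two categories (other scores equal) totals about 3.9, so the stable plan wins.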

MaskProxy should clear the same gates as any other provider: measurable evidence beats marketing promises every time.

Scaling rules for scraping and monitoring

Scaling is where most datacenter proxy failures show up.

Pooling strategy

- Split pools by target and risk tier

- Do not let one hot target poison your entire pool

- Keep separate pools for monitoring and crawling

If you need stability for allowlisted targets or long sessions, Static Datacenter Proxies is the model to benchmark against when you translate “stability” into churn rate, timeout rate, and peak-hour variance.

Pacing and retry discipline

- Use backoff with jitter

- Cap retries per request and cap total retry budget

- Add circuit breakers when 429 or timeouts surge
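
The first two rules can be sketched in a few lines. The example uses the "full jitter" variant of exponential backoff (a random delay between zero and the capped exponential ceiling) plus a shared retry budget; the names `backoff_delays` and `RetryBudget` and all default values are assumptions for illustration, and a circuit breaker is left out to keep the sketch short.

```python
import random
from typing import List, Optional


def backoff_delays(max_retries: int = 3, base_s: float = 0.5, cap_s: float = 30.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Return one randomized delay per allowed retry (exponential backoff, full jitter)."""
    rng = rng or random.Random()
    return [rng.uniform(0.0, min(cap_s, base_s * 2 ** attempt))
            for attempt in range(max_retries)]


class RetryBudget:
    """Caps the total number of retries across a whole job, not just per request."""

    def __init__(self, max_total: int) -> None:
        self.remaining = max_total

    def take(self) -> bool:
        """Consume one retry if any remain; return False once the budget is spent."""
        if self.remaining <= 0:
            return False
        self.remaining -= 1
        return True
```

A request retries only while it still has delays left in its own list and `budget.take()` returns true, which keeps retry amplification bounded even when a target starts failing broadly.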

Isolation

- Isolate by job type: SERP, product pages, APIs

- Isolate by request footprint: HTML vs assets vs API calls

- Isolate by auth mode: user-pass pools vs allowlisted pools

Change checklist

- After any change, re-run Gate 1 and Gate 4

- Re-validate p95 latency and success rate at the same concurrency

- Re-check 407 and 429 rates before increasing throughput

Free trial traps and free plan limits checklist

Use this checklist before you invest engineering time.

- Hidden caps: bandwidth, request count, concurrency, session limits

- Geo restrictions: limited countries, limited cities, random assignment

- Rotation limitations: rotation gated to paid tier, slow refresh, small pool

- Target restrictions: blocked categories, silent throttling, fair-use shaping

- Operational gotchas: peak-hour collapse, shared pool contention, no replacements

- Billing gotchas: auto-renew, auto-upgrade, unclear overage pricing

Testable rule: any limit that cannot be observed in metrics is a risk. Treat it as a failure until proven otherwise.

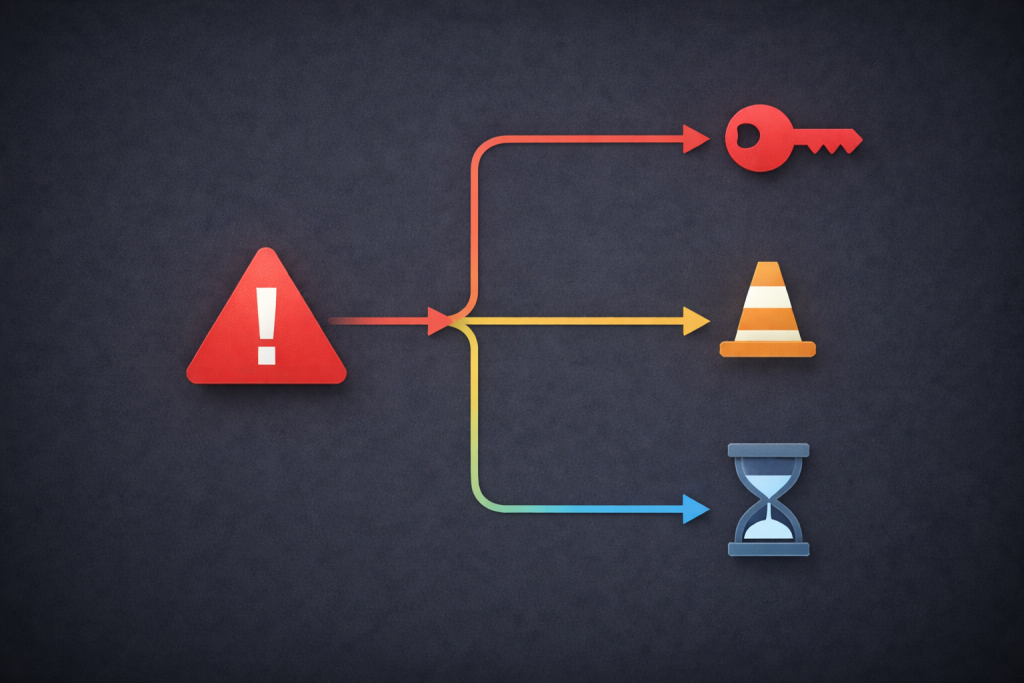

Common failure patterns with fast triage steps

- Symptom: frequent 407 responses | Likely cause: auth mismatch | First fix: verify proxy credentials and auth method

- Symptom: rising 429 at modest concurrency | Likely cause: rate limiting | First fix: reduce concurrency, honor Retry-After when present, add backoff

- Symptom: timeout spikes during ramp | Likely cause: dead exits or contention | First fix: health checks, eviction, split pools

- Symptom: stable connect but wrong content | Likely cause: soft blocks | First fix: detect block pages, adjust pacing, segment exits

- Symptom: high variance by time of day | Likely cause: shared pool congestion | First fix: benchmark peak windows, consider dedicated or static

Daniel Harris is a Content Manager and Full-Stack SEO Specialist with 7+ years of hands-on experience across content strategy and technical SEO. He writes about proxy usage in everyday workflows, including SEO checks, ad previews, pricing scans, and multi-account work. He’s drawn to systems that stay consistent over time and writing that stays calm, concrete, and readable. Outside work, Daniel is usually exploring new tools, outlining future pieces, or getting lost in a long book.

FAQ

What are datacenter proxies best for

High-volume scraping, monitoring, price intelligence, and QA where speed and repeatability matter.

Dedicated vs shared datacenter proxies

Dedicated is usually more stable; shared is cheaper but often higher variance under concurrency.

Static vs rotating proxies

Static for allowlists and long sessions; rotating for distribution and per-IP rate caps.

How to test datacenter proxies before buying

Run four gates: auth/connectivity, protocol/DNS, block and 429 behavior, then a controlled ramp benchmark.

Why you see 407 Proxy Authentication Required

Your proxy credentials or auth mode is wrong, or your client is not sending proxy auth correctly.

What 429 Too Many Requests means in tests

You’re being rate limited; slow down, add backoff, cap retries, and re-check IP reputation if it persists.