Rotating Residential Proxies Evaluation Playbook for Web Scraping in 2026

Rotating residential proxies can turn a fragile scraping pipeline into something that survives real-world rate limits, geo gating, and IP reputation decay. They can also become a budget sink if you only test “day one traffic” and never validate session behavior, pool quality, and cost per successful outcome.

This is not a “top providers” list. It’s an evaluation playbook you can run in a day: a decision framework, measurable tests, a scoring rubric, and a cost model that converts vendor claims into cost per 1,000 successful requests. When you define requirements and acceptance criteria, it helps to anchor your thinking to a concrete control surface such as Rotating Residential Proxies, then validate every claimed behavior under your own traffic shape.

Why rotating residential proxy projects fail even with huge IP pools

Most proxy failures are not outages. They are mismatches between workload shape and proxy behavior.

Common failure modes:

- You rotate IPs but keep the same detectable automation fingerprint, so correlation still happens.

- Your retry policy silently triples traffic, so “success rate” looks fine while cost explodes.

- The trial performs well at low concurrency, then hits a quota cliff under ramp and soak.

- You assumed sticky sessions, but the session lifetime is shorter than your job cadence.

- Your logs can’t tell whether you’re seeing blocks, throttling, or challenge pages.

If you cannot explain your own errors, you cannot evaluate a proxy.

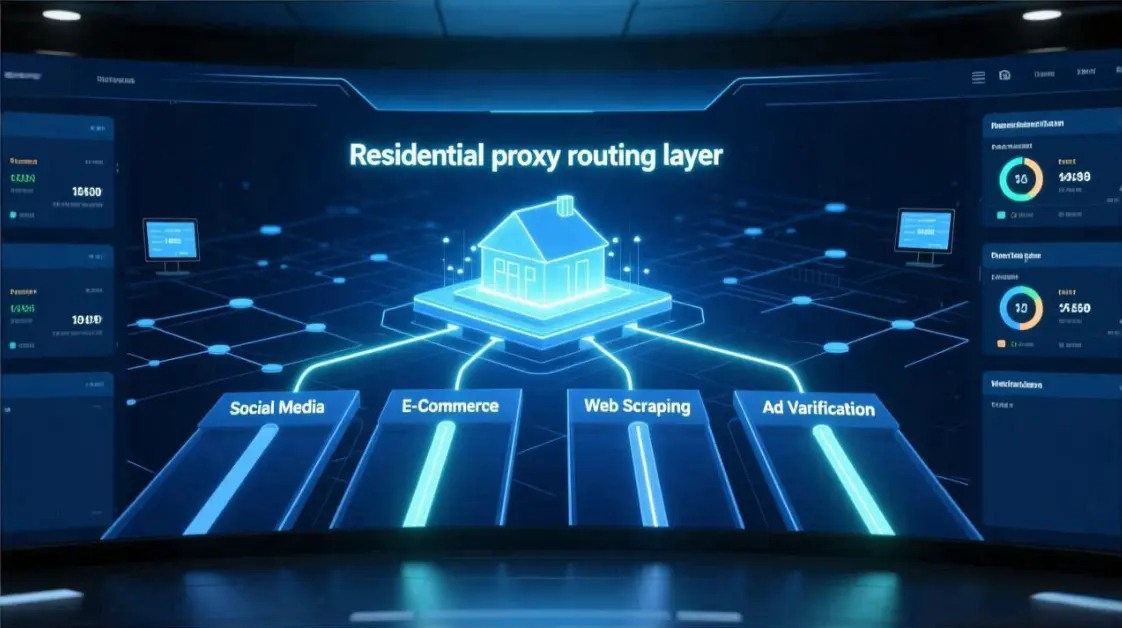

What rotating residential proxies solve and what they do not

Rotating residential proxies solve:

- Identity distribution across a residential IP pool

- Geo-targeted routing for localized content access

- Rate-limit relief when per-IP throttling is the dominant defense

They do not solve:

- Poor pacing and bursty retries

- Fingerprint correlation in browser-based scraping

- Session integrity for multi-step flows

- Compliance and policy misalignment

Keep the category boundary clear: rotating residential is a subset of Rotating Proxies, but your success depends more on pacing, session design, and observability than on “rotation” as a buzzword.

Decision framework to choose the right proxy type

Rotating residential proxies are usually a good fit when:

- Requests are mostly stateless and independent

- You need broad country coverage and localized results

- Targets are sensitive to datacenter ranges and ASN reputation

- You can tolerate higher latency variance in exchange for better reach

They are high risk when:

- You scrape session-heavy funnels such as login, checkout, or inbox-like flows

- You require long-lived identity continuity across minutes to hours

- Targets escalate quickly to browser fingerprinting and behavioral detection

In high-throughput, tolerant-target cases, it can be cheaper and simpler to start with Datacenter Proxies and switch to residential rotation only where blocks prove it’s necessary.

The metrics that actually decide success

Do not evaluate by “IP pool size.” Evaluate by outcomes that survive marketing.

Metric: Success rate | Why it matters: predicts usable yield | How to measure: count 2xx with valid content, exclude your own parsing failures

Metric: Block rate | Why it matters: shows detection escalation | How to measure: track 401, 403, and known block-body signatures

Metric: Throttle rate | Why it matters: reveals pacing mismatch | How to measure: track HTTP 429 Too Many Requests and honor Retry-After

Metric: p95 latency | Why it matters: determines throughput and timeout budget | How to measure: steady-state timing histograms

Metric: Geo accuracy | Why it matters: wrong geo breaks localized scraping | How to measure: geo-check endpoints plus target-side locale signals
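Once each request is logged with an outcome label, the metrics above reduce to a few ratios and a percentile. The following is a minimal Python sketch, not a reference implementation. The labels ("success", "block", "throttle", "challenge", "parse_error") and the `Outcome` fields are hypothetical names for whatever your own classifier and logs produce. Parse failures are dropped before any rate is computed, because they reflect your parser rather than the proxy. For p95, pass only steady-state samples.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    # One logged request. error_class comes from your own classifier; the
    # labels used here ("success", "block", "throttle", "challenge",
    # "parse_error") are hypothetical.
    error_class: str
    latency_ms: float
    geo_requested: str
    geo_observed: str


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile, using integer math to avoid float rounding."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def summarize(outcomes: list[Outcome]) -> dict[str, float]:
    # Your own parsing failures say nothing about the proxy, so drop them first.
    measured = [o for o in outcomes if o.error_class != "parse_error"]
    total = len(measured)
    if total == 0:
        raise ValueError("no measurable outcomes")

    def rate(label: str) -> float:
        return sum(o.error_class == label for o in measured) / total

    return {
        "success_rate": rate("success"),
        "block_rate": rate("block"),
        "throttle_rate": rate("throttle"),
        "p95_latency_ms": p95([o.latency_ms for o in measured]),
        "geo_accuracy": sum(o.geo_requested == o.geo_observed for o in measured) / total,
    }
```

Nearest-rank p95 is used because it always returns a latency you actually observed. Swap in an interpolating percentile if your dashboards use one, so the numbers match.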

If you want your client to behave consistently across targets, it’s worth aligning your throttling logic with the IANA HTTP Status Code Registry so your taxonomy stays stable as you scale.
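A small classifier is one way to keep that taxonomy explicit. The sketch below checks the status code first and then the body, because challenge and soft-block pages often arrive as 200. The regex signatures are hypothetical placeholders. Replace them with the block and challenge pages you capture during your own baseline run. Here "success" only means "2xx with no known challenge or block signature". Validating the content is still your parser's job.

```python
import re

# Hypothetical body signatures: replace with patterns from your own captured pages.
CHALLENGE_PATTERNS = [re.compile(p, re.I) for p in (r"captcha", r"verify you are (a )?human")]
BLOCK_PATTERNS = [re.compile(p, re.I) for p in (r"access denied", r"request blocked")]


def classify(status: int, body: str) -> str:
    """Map one response to an error class: status code first, then body signatures."""
    if status == 429:
        return "throttle"  # 429 Too Many Requests: a pacing problem, honor Retry-After
    if status in (401, 403):
        return "block"
    if 200 <= status < 300:
        # A 2xx can still be a challenge or soft-block page.
        if any(p.search(body) for p in CHALLENGE_PATTERNS):
            return "challenge"
        if any(p.search(body) for p in BLOCK_PATTERNS):
            return "block"
        return "success"
    if 500 <= status < 600:
        return "server_error"
    return "other"
```

Throttling is kept separate from blocking on purpose. The two call for different first fixes: slower pacing versus identity or fingerprint changes.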

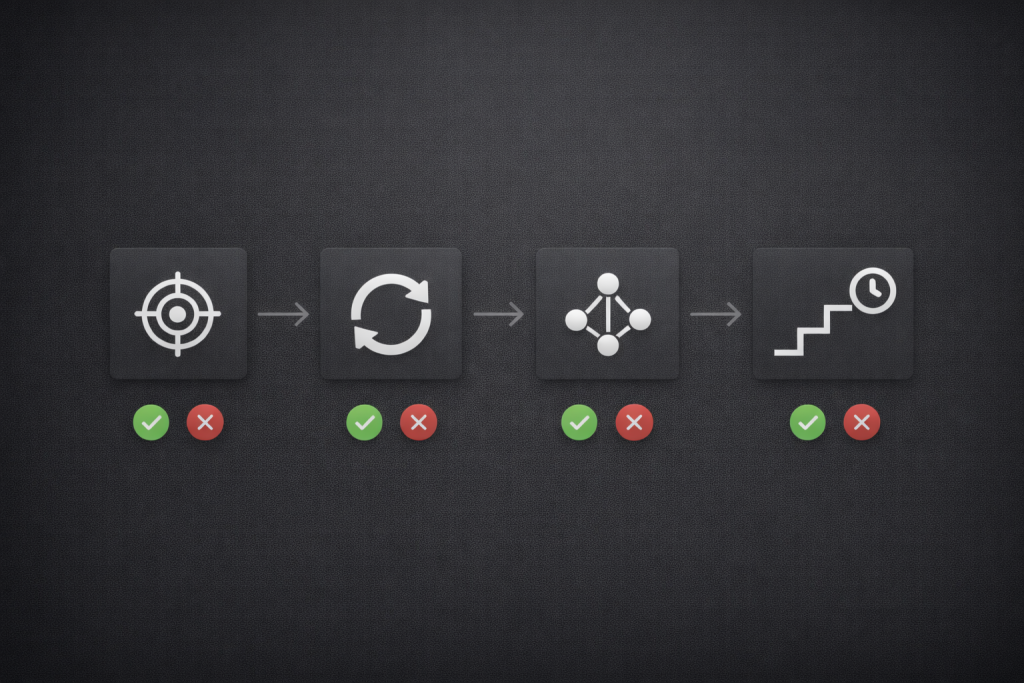

The executable test plan you can run in one day

Step 1: Define the target profile and request budget

Write down what you are actually trying to scrape:

- Target endpoints, response types, and whether JS rendering is required

- Required countries and any city-level constraints

- Expected steady-state concurrency and burst behavior

- Timeout and retry policy, including max retry budget per request

Baseline without proxies:

- Run a small sample from a known-clean network

- Capture examples of success, soft blocks, hard blocks, and challenge pages
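It can help to write the Step 1 profile down as data so later tests read from one source. The following minimal Python sketch uses a hypothetical `TargetProfile` structure; the field names are illustrative, not a standard. `worst_case_attempts` makes the retry budget visible. With `max_retries=2`, a fully failing run can triple traffic, which is exactly the silent cost inflation described earlier.

```python
from dataclasses import dataclass


@dataclass
class TargetProfile:
    # Hypothetical structure for the Step 1 target profile.
    name: str
    countries: list[str]
    needs_js_rendering: bool
    steady_concurrency: int
    burst_concurrency: int
    timeout_s: float
    max_retries: int  # retries per logical request, not counting the first attempt

    def __post_init__(self) -> None:
        if self.max_retries < 0:
            raise ValueError("max_retries must be >= 0")
        if self.burst_concurrency < self.steady_concurrency:
            raise ValueError("burst concurrency should not be below steady state")

    def worst_case_attempts(self, logical_requests: int) -> int:
        """Upper bound on proxy attempts if every request exhausts its retry budget."""
        return logical_requests * (1 + self.max_retries)
```

For example, a profile with `max_retries=2` and 10,000 logical requests can generate up to 30,000 billable attempts.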

Step 2: Verify rotation and session behavior

Your goal is to prove what the proxy system does under your cadence.

Checklist:

- Confirm the exit IP changes when you expect it to change

- Confirm sticky sessions keep the same exit IP for the time window you require

- Confirm what happens after idle time and reconnect storms

- Confirm that geo-targeted routing remains stable under concurrency

Treat session behavior as a contract test. If it is not reproducible, it is not an operational feature.
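As a contract test, the sticky-session check can be reduced to one measurement. Poll an IP-echo endpoint of your choice through a single sticky session and record `(timestamp_seconds, exit_ip)` pairs. Then measure how long the first exit IP held. The following minimal Python sketch assumes that polling setup. The returned value is conservative. It stops at the last sample that still showed the original IP, so the true lifetime lies somewhere before the next sample.

```python
def sticky_hold_seconds(observations: list[tuple[float, str]]) -> float:
    """Seconds the first exit IP was observed before it changed.

    observations: (timestamp_seconds, exit_ip) pairs sampled through one
    sticky session. If the IP never changed, this returns the span of the
    whole sample, which is only a lower bound on the session lifetime.
    """
    if not observations:
        raise ValueError("no observations")
    ordered = sorted(observations)
    start_t, first_ip = ordered[0]
    last_same_t = start_t
    for t, ip in ordered[1:]:
        if ip != first_ip:
            break
        last_same_t = t
    return last_same_t - start_t


def session_contract_holds(observations: list[tuple[float, str]], required_s: float) -> bool:
    """True if the session kept its exit IP for at least the required window."""
    return sticky_hold_seconds(observations) >= required_s
```

Run the same check after deliberate idle gaps and after reconnect bursts. A contract that only holds under continuous traffic is not the contract your jobs will see.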

Step 3: Pool quality and contamination checks

You want to detect “burnt” segments early.

Checklist:

- Sample 200–500 requests across your main geos

- Track reuse rate: how often the same exit IP reappears

- Track collision rate: how often concurrent workers share an exit IP

- Track ASN clustering if the target is reputation-sensitive

- Track geo drift: requested geo vs observed geo


Signal: Rising collisions at higher concurrency | Likely cause: small effective pool | First measurement: exit-IP uniqueness per worker

Signal: Stable 2xx but wrong content locale | Likely cause: geo mismatch | First measurement: geo-check sampling plus locale hints
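The reuse, collision, and geo-drift signals from the checklist can be computed from the same sample log. The following is a minimal Python sketch under one assumption: each request records a hypothetical `slot` index identifying the concurrent batch it ran in. A collision then means two different workers saw the same exit IP in the same slot. ASN clustering is left out because it needs an IP-to-ASN dataset that this sketch does not assume.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Sample:
    slot: int  # hypothetical: index of the concurrent batch the request ran in
    worker: int
    exit_ip: str
    geo_requested: str
    geo_observed: str


def pool_quality(samples: list[Sample]) -> dict[str, float]:
    n = len(samples)
    if n == 0:
        raise ValueError("no samples")

    # Reuse: share of requests whose exit IP already appeared earlier in the sample.
    reuse_rate = 1 - len({s.exit_ip for s in samples}) / n

    # Collision: share of requests that shared an exit IP with a different
    # worker in the same concurrent slot.
    by_slot: dict[int, list[Sample]] = defaultdict(list)
    for s in samples:
        by_slot[s.slot].append(s)
    collided = 0
    for group in by_slot.values():
        workers_per_ip: dict[str, set[int]] = defaultdict(set)
        for s in group:
            workers_per_ip[s.exit_ip].add(s.worker)
        collided += sum(len(workers_per_ip[s.exit_ip]) > 1 for s in group)

    geo_drift = sum(s.geo_requested != s.geo_observed for s in samples) / n
    return {"reuse_rate": reuse_rate, "collision_rate": collided / n, "geo_drift": geo_drift}
```

Compare these numbers across concurrency levels rather than reading them in isolation. Collisions that rise with concurrency are the "small effective pool" signal from the table above.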

Step 4: Concurrency ramp and soak

Most trials “work” because nobody runs the real traffic shape.

Ramp plan:

- Warm-up for 5 minutes at low concurrency

- Ramp in steps (1x → 3x → 10x) with jitter

- Soak at expected peak long enough to expose quota cliffs

- Cool down and confirm recovery behavior
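The ramp plan can be encoded as a schedule your load driver reads. This minimal Python sketch follows the 1x → 3x → 10x steps above, capped at the expected peak. It also adds a uniform jitter helper for inter-request delays. The 5-minute warm-up comes from the plan. The step, soak, and cool-down durations and the 30% jitter are placeholder assumptions to tune against your own quota windows.

```python
import random

_RNG = random.Random()


def ramp_schedule(base: int, peak: int, warmup_s: int = 300, step_s: int = 600,
                  soak_s: int = 3600, cooldown_s: int = 300) -> list[tuple[str, int, int]]:
    """Return phases as (name, concurrency, duration_seconds)."""
    phases = [("warmup", base, warmup_s)]
    for mult in (1, 3, 10):
        # Never exceed the expected peak, even if 10x base would.
        phases.append((f"ramp_{mult}x", min(base * mult, peak), step_s))
    phases.append(("soak", peak, soak_s))
    phases.append(("cooldown", base, cooldown_s))
    return phases


def jittered_delay(mean_s: float, jitter_frac: float = 0.3,
                   rng: random.Random = _RNG) -> float:
    """Inter-request delay drawn uniformly from mean_s * (1 +/- jitter_frac)."""
    return mean_s * rng.uniform(1 - jitter_frac, 1 + jitter_frac)
```

Jitter keeps workers from firing in lockstep. Lockstep bursts can trip per-IP limits even when the average rate is acceptable.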

Pass/fail gates you should define before running:

- Minimum success rate at steady state for each target class

- Maximum acceptable throttle rate and block rate drift

- Maximum p95 latency relative to your pipeline budget

- Rollback triggers: sudden CAPTCHA spike, geo drift, or error taxonomy change
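Writing the gates as code before the run makes it harder to move the goalposts afterwards. The following minimal Python sketch assumes the metrics come from a summary dict with keys such as `success_rate`, `throttle_rate`, `block_rate`, and `p95_latency_ms`. Those key names are hypothetical. It also assumes "block rate drift" means the rise over the warm-up baseline. Rollback triggers such as a CAPTCHA spike or geo drift can be added the same way.

```python
from dataclasses import dataclass


@dataclass
class Gates:
    # Thresholds fixed before the run; the values in the usage example are placeholders.
    min_success_rate: float
    max_throttle_rate: float
    max_block_rate_drift: float  # allowed rise in block rate over the warm-up baseline
    max_p95_ms: float


def evaluate(gates: Gates, baseline: dict[str, float], steady: dict[str, float]) -> list[str]:
    """Return the names of failed gates; an empty list means the run passes."""
    failures = []
    if steady["success_rate"] < gates.min_success_rate:
        failures.append("success_rate")
    if steady["throttle_rate"] > gates.max_throttle_rate:
        failures.append("throttle_rate")
    if steady["block_rate"] - baseline["block_rate"] > gates.max_block_rate_drift:
        failures.append("block_rate_drift")
    if steady["p95_latency_ms"] > gates.max_p95_ms:
        failures.append("p95_latency")
    return failures
```

Returning the list of failed gates, rather than a single boolean, tells you which layer to investigate first.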

Scoring rubric you can apply to any provider

Use a weighted rubric so your choice matches your workload, not a marketing page.

Score each category from 0–5:

- Reliability under load: 25%

- Control and session behavior: 15%

- Geo accuracy and coverage: 15%

- Latency consistency: 10%

- Observability and debugging: 15%

- Support and incident handling: 10%

- Compliance transparency: 10%

Definitions that keep scoring honest:

- A “5” in control means predictable session windows, stable geo routing, and reproducible identity behavior.

- A “5” in observability means per-request metadata and an error taxonomy you can use to isolate failure layers.

- A “5” in compliance means clear sourcing explanations and enforceable acceptable-use boundaries.

If you’re comparing vendors, write the rubric once, then score every provider the same way. Teams often find it easier to operationalize requirements when they can map controls to concrete behavior (MaskProxy is one example where the control expectations can be expressed in operational terms), but the scoring logic should remain provider-agnostic.
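The rubric arithmetic is simple, but encoding it once guarantees every provider is scored the same way. The following minimal Python sketch uses the weights listed above, which sum to 100. Dividing by 5 maps the 0–5 category scores onto a 0–100 total. The short category keys are labels chosen for this sketch.

```python
# Weights from the rubric above (they sum to 100); the key names are illustrative.
WEIGHTS = {
    "reliability": 25,
    "control": 15,
    "geo": 15,
    "latency": 10,
    "observability": 15,
    "support": 10,
    "compliance": 10,
}


def weighted_score(scores: dict[str, int]) -> float:
    """Combine 0-5 category scores into a 0-100 total."""
    if set(scores) != set(WEIGHTS):
        raise ValueError("score every category exactly once")
    for name, s in scores.items():
        if not 0 <= s <= 5:
            raise ValueError(f"{name} must be scored 0-5")
    return sum(WEIGHTS[k] * scores[k] for k in WEIGHTS) / 5
```

For example, a provider scoring 4 on reliability and 3 everywhere else lands at 65.0.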

Cost model that prevents surprise bills

Compute cost per 1,000 successful requests (CP1K). Everything else is a proxy for that outcome.


Metric: Cost per 1,000 successful requests | Why it matters: aligns spend to usable yield | How to measure: total cost / successes × 1000

Metric: Retry multiplier | Why it matters: retries silently inflate cost | How to measure: total attempts / total successes

Metric: Render multiplier | Why it matters: JS rendering changes economics | How to measure: marginal cost and latency of rendered vs raw HTTP

To compare pricing across vendors, normalize inputs into the same unit. Using a reference like Rotating Residential Proxies Pricing helps you structure the CP1K math so you can swap in any provider’s numbers without changing the model.
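The following minimal Python sketch shows that normalization. It rests on one assumption you should confirm with each vendor: every attempt is billed, including failures and retries. It also assumes bandwidth is counted in decimal GB using the per-attempt payload size the vendor meters. Both billing models reduce to a total cost, and CP1K follows from that total and your success count.

```python
def cp1k(total_cost: float, successes: int) -> float:
    """Cost per 1,000 successful requests: total cost / successes x 1000."""
    if successes <= 0:
        raise ValueError("no successes")
    return total_cost / successes * 1000


def retry_multiplier(attempts: int, successes: int) -> float:
    """Total attempts / total successes; 1.0 means no wasted attempts."""
    return attempts / successes


def cost_bandwidth_plan(price_per_gb: float, avg_kb_per_attempt: float, attempts: int) -> float:
    """Total cost when billed by bandwidth (assumes decimal GB and every attempt billed)."""
    gb = attempts * avg_kb_per_attempt / 1_000_000
    return gb * price_per_gb


def cost_request_plan(price_per_1k_attempts: float, attempts: int) -> float:
    """Total cost when billed per request or credit (assumes every attempt billed)."""
    return attempts / 1000 * price_per_1k_attempts
```

For example, 12,000 attempts that yield 10,000 successes give a retry multiplier of 1.2. At an average of 200 KB per attempt, a $5/GB plan costs $12, and a plan priced at $1 per 1,000 requests also costs $12. Both work out to a CP1K of $1.20, so the plans are equivalent for this traffic shape, even though their list prices look unrelated.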

Free trial and onboarding traps

Trials are designed to start smooth. Your tests must be designed to break.

Traps to watch:

- Concurrency caps that only appear after day one

- Trial pools that differ from paid pools in quality or contamination

- Premium geo flags that are quietly restricted

- Sticky sessions that expire faster than your job cadence

- Support and SLA exclusions during trials

Your rule should be simple: no vendor “passes” without ramp and soak, and no vendor “wins” without a CP1K estimate.

Failure patterns and fast fixes

Symptom: Success rate drops only at higher concurrency | Likely cause: hidden caps or pool collisions | First fix: lower ramp step size and add jitter

Symptom: Throttle spikes with 429 and Retry-After | Likely cause: pacing mismatch | First fix: honor Retry-After and implement adaptive backoff

Symptom: CAPTCHA rate climbs while status codes stay 200 | Likely cause: challenge pages | First fix: detect challenge HTML and isolate fingerprint vs IP changes

Symptom: Geo drift shows up mid-soak | Likely cause: unstable geo routing | First fix: geo-check sampling and route quarantine

Symptom: Sessions break mid-flow | Likely cause: rotation during session | First fix: switch to sticky sessions or redesign for fewer identity changes
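For the throttling fix, "honor Retry-After and implement adaptive backoff" can be sketched in a few lines. Retry-After is either a number of seconds or an HTTP-date, and the standard library's `email.utils.parsedate_to_datetime` can parse the date form. The following minimal Python sketch lets the server's hint win when present. Otherwise it falls back to capped exponential backoff with full jitter. The 1-second base and 60-second cap are placeholder assumptions.

```python
import random
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional

_RNG = random.Random()


def retry_after_seconds(header: Optional[str],
                        now: Optional[datetime] = None) -> Optional[float]:
    """Parse Retry-After (delay-seconds or HTTP-date); None if absent or unparseable."""
    if header is None:
        return None
    value = header.strip()
    if value.isascii() and value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # the exception type depends on the Python version
        return None
    if when.tzinfo is None:  # a "-0000" zone yields a naive datetime; treat it as UTC
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


def backoff_delay(attempt: int, retry_after: Optional[str], base_s: float = 1.0,
                  cap_s: float = 60.0, rng: random.Random = _RNG) -> float:
    """Seconds to wait before retry number `attempt` (0-based).

    A valid Retry-After wins; otherwise use capped exponential backoff with full jitter.
    """
    hint = retry_after_seconds(retry_after)
    if hint is not None:
        return hint
    return rng.uniform(0.0, min(cap_s, base_s * 2 ** attempt))
```

Full jitter spreads retries from many workers across the whole window instead of clustering them at the same instant. That matters when a whole worker pool is throttled at once.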

Isolation principle: first prove whether the failure is IP reputation, pacing, fingerprint, or target change.

Compliance and ethics in practical terms

Residential traffic deserves extra scrutiny.

Practical guardrails:

- Ask vendors to explain sourcing and consent plainly

- Minimize collection of sensitive data, and keep audit logs for automation

- Align your activity with internal policy and target-site rules

If you want a defender’s view of how sites classify automation and why defenses escalate beyond IP reputation, OWASP’s Automated Threats to Web Applications is a useful reference. For browser automation implementations, the W3C WebDriver standard is the baseline many stacks build on.

Operational checklist for production

Rotation policy defaults:

- Stateless endpoints: rotate per request, add jitter, cap retries

- Soft sessions: sticky sessions for 5–15 minutes, rotate between tasks

- Hard sessions: consider static identity routing and longer-lived pools

Observability checklist:

- Log request ID, target, geo requested, geo observed, exit IP, status, bytes, latency, error class

- Dashboard success rate, throttle rate, CAPTCHA rate, p95 latency, CP1K

- Alert on sustained success drops, throttle drift, geo drift, and sudden error-shape changes
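One way to make the log fields above consistent is to emit one JSON object per request. The following minimal Python sketch assumes JSON lines as the log format and a hypothetical `RequestLog` record whose fields mirror the checklist. `bytes` is renamed `response_bytes` to avoid shadowing the built-in name. It also includes a simple "sustained drop" alert rule over per-interval success rates, so a single bad minute does not page anyone.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RequestLog:
    # Fields mirror the observability checklist; the JSON-lines format is an assumption.
    request_id: str
    target: str
    geo_requested: str
    geo_observed: str
    exit_ip: str
    status: int
    response_bytes: int
    latency_ms: float
    error_class: str

    def to_json_line(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def sustained_drop(success_rates: list[float], threshold: float, window: int) -> bool:
    """True when each of the last `window` interval success rates is below threshold."""
    recent = success_rates[-window:]
    return len(recent) == window and all(r < threshold for r in recent)
```

The same windowed rule works for throttle drift and geo drift if you feed it the corresponding per-interval rates and flip the comparison.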

Incident response checklist:

- Rollback switch to safer routing and lower concurrency

- Quarantine suspect pool segments

- Preserve a small sanitized evidence bundle for vendor support

Conclusion and next actions

If you want to choose rotating residential proxies with confidence, do three things: define your target profile, run ramp-and-soak tests with measurable gates, and compute CP1K so cost is tied to success. When your workload is session-heavy or identity continuity matters more than rotation, Static Residential Proxies are often the better fit.

Daniel Harris is a Content Manager and Full-Stack SEO Specialist with 7+ years of hands-on experience across content strategy and technical SEO. He writes about proxy usage in everyday workflows, including SEO checks, ad previews, pricing scans, and multi-account work. He’s drawn to systems that stay consistent over time and writing that stays calm, concrete, and readable. Outside work, Daniel is usually exploring new tools, outlining future pieces, or getting lost in a long book.

FAQ

How many rotating residential IPs do I need for web scraping?

Start from concurrency and collision rate. Increase pool size only when collisions and throttles rise under soak.

Should I rotate every request or use sticky sessions?

Rotate per request for stateless scraping. Use sticky sessions for multi-step flows that break when identity changes.

What is a realistic success rate target?

Set it per target class. Easy public pages can be very high; hardened targets require trade-offs against CP1K.

How do I compare bandwidth pricing with credit pricing?

Normalize both to cost per 1,000 successful requests using your own retry multiplier and average payload sizes.

Why did my trial work on day one but fail later?

Usually because the trial never ran ramp and soak with production-like concurrency, retries, and time windows.

How do I reduce compliance risk with residential proxies?

Prefer clear consent-based sourcing, minimize sensitive data collection, and log automation for internal review.